Consumers today count on user-generated content when shopping for products online, including review imagery posted by unbiased shoppers. In fact, for many, the content posted by fellow consumers offers the best validation and is vital to selecting the right items for the home.

But what if you're a blind or low vision (BLV) consumer? According to the CDC, approximately 12 million people 40 years and over in the United States have a vision impairment. Many of them rely on screen readers when shopping online. These tools read web page content aloud, including descriptions of images, known as alternative text (alt-text). However, most sites provide only minimal or generic alt-text for review imagery, which gives the shopper little sense of what the reviewer has shared.

We decided to do something about this by creating a prototype called AIDE, which stands for Automatic Image Description Engine. AIDE uses computer vision and a natural language processing (NLP) model developed by Wayfair to automatically generate alternative text for review imagery on the Wayfair site, which BLV people will hear while shopping.

We recently submitted a technical paper about AIDE to the ACM Web4All conference, which focuses on all aspects of web accessibility. I'm happy to report that we were accepted to present our story. Here's a snippet of what we shared.

How This Project Started

Before diving into this project, we had several discussions with Wayfair's legal and accessibility teams. Our goal was to understand the problems BLV people experience on retail sites, particularly with imagery, which is vital to a good shopping experience.

After some discussion, we narrowed our focus to the imagery included in product reviews rather than the pictures featured on product pages. Product page images are usually accompanied by a wealth of other product information, such as the material description, color, and size. From this information, BLV shoppers can get a good sense of what is in these staged photos. Review imagery, however, is user-generated content, so uploaded images can vary dramatically, for instance depending on how the reviewer has staged their home. Additionally, the alt-text typically attached to review images uses non-descriptive wording such as "customer image" or "summary of review."

Focusing on review imagery made this project a more significant undertaking. With review imagery, shoppers are trying to understand how a product looks in another person's home and how that context translates to their own. This meant we needed to understand the visual information in each image and then convey it from a blind user's perspective. Next, we would need to merge this generated image description with any accompanying review text to create a cohesive description that helps shoppers.

What is the Biggest Challenge?

Before taking our next step, we needed to fully understand what to build. This required that we speak with BLV individuals. Our goal was to gain a complete understanding of their perspectives on review imagery and what content we could provide that would be most useful to them.

We connected with BLV individuals over Zoom and interviewed them for an hour about their current challenges and needs when shopping online for home items. Here's what they told us. They use screen readers, which at the most basic level read aloud what's on a web page. Some screen readers are smarter: they understand how a web page is structured, read out its key text, and help people navigate the page.

However, BLV individuals found that review imagery lacked the information they needed, namely good alt-text, to support their shopping. This gap matters because review photos carry real weight: according to research from Bazaarvoice, 66 percent of surveyed sighted users felt that review photos played an important role in their purchasing decisions, and 75 percent preferred user-submitted content over staged imagery because it lends products an air of authenticity.

Our interviews suggested that these findings extend to BLV shoppers: everyone we spoke with reported looking through reviews while shopping online. Next, we needed to determine exactly what they wanted from review imagery and, more specifically, which information mattered most to them. The top answers were:

- Product’s color

- Room’s setting

- Image overview

- Product’s unique features

Now it was time to move forward to build the prototype.

What makes AIDE tick?

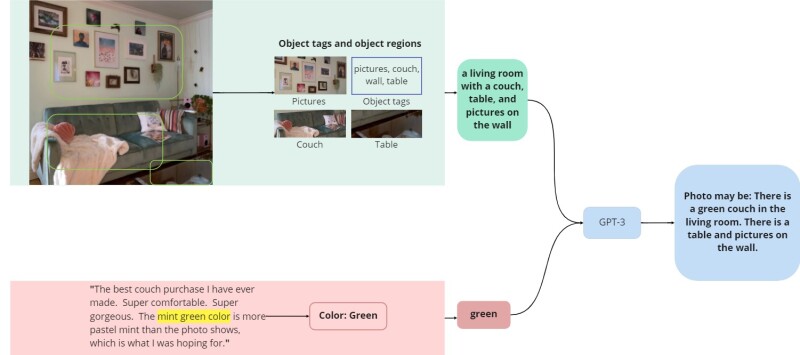

There are three main components to AIDE: scene description, text processing, and generating human-readable output.

- Scene Description: This is an image processing algorithm that uses machine learning and object detection to describe what is happening in an image. It could be a living room, a kitchen, you name it. For example, given a living room image (see below), the object detection component would output “living room, couch, table, pictures on the wall.” This output is then fed into an image captioning model, which uses a Vision-Language Pre-training (VLP) method to create a human-readable sentence: “a living room with a couch, table, and pictures on the wall.”

- Text Processing Algorithm: Thanks to the Data Science team, most of this component was already implemented! It focuses on the review comments that accompany the image, parsing them for semantically relevant keywords that point to product-specific features. For example, if a reviewer commented, “The mint green color is more pastel mint than the color shows,” the algorithm would output “green,” having determined that the color green was an important feature of the product.

- Human-Readable Output: The final step uses GPT-3 to generate human-readable output from the scene description and the parsed review keywords. GPT-3 essentially serves as a copy editor, combining the scene and text descriptions into a concise sentence that is easy to understand.
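To make the text-processing step more concrete, here is a minimal sketch of keyword extraction for the color example above. It swaps in a simple lexicon lookup for the Data Science team's semantic model, which this post does not describe; the `COLOR_LEXICON` table and the `extract_color_keywords` function are hypothetical names chosen for illustration.

```python
import re

# Hypothetical lexicon mapping color words a reviewer might use to a base color.
# This is an illustrative stand-in, not Wayfair's keyword model.
COLOR_LEXICON = {
    "mint": "green",
    "sage": "green",
    "green": "green",
    "navy": "blue",
    "blue": "blue",
    "charcoal": "gray",
    "gray": "gray",
    "grey": "gray",
    "ivory": "white",
    "white": "white",
}


def extract_color_keywords(comment: str) -> list[str]:
    """Return base colors mentioned in a review comment, in first-seen order, without duplicates."""
    # Lowercase and split into alphabetic tokens, dropping punctuation.
    tokens = re.findall(r"[a-z]+", comment.lower())
    colors: list[str] = []
    for token in tokens:
        base = COLOR_LEXICON.get(token)
        if base is not None and base not in colors:
            colors.append(base)
    return colors


if __name__ == "__main__":
    print(extract_color_keywords(
        "The mint green color is more pastel mint than the color shows"))
```

Both "mint" and "green" map to the same base color, so the comment from the example collapses to a single keyword. A fixed table like this only recognizes words it already lists and ignores context, which is where a semantic model would be expected to do better.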

As I said above, these three pieces of technology already existed. All we needed to do was stitch them together and make some tweaks to generate useful output.
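Stitching the pieces together can be pictured as a small pipeline: the scene caption and the extracted keywords are assembled into a prompt, and a language model rewrites them as one sentence. The sketch below assumes the upstream steps have already produced a caption and a keyword list. The prompt wording, the `ReviewImage`, `build_prompt`, `template_alt_text`, and `describe` names, and the `generate` callable (a stand-in for whatever GPT-3 client is used) are all hypothetical; a template fallback is included so the example runs without a model.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ReviewImage:
    # Output of the scene-description step (assumed to be a lowercase caption).
    caption: str
    # Output of the text-processing step, e.g. ["green"].
    keywords: list[str] = field(default_factory=list)


def build_prompt(image: ReviewImage) -> str:
    """Assemble a hypothetical copy-editing instruction for a language model."""
    features = ", ".join(image.keywords) if image.keywords else "none mentioned"
    return (
        "Rewrite the following as one concise alt-text sentence "
        "for a screen reader user.\n"
        f"Scene: {image.caption}\n"
        f"Product features from the review: {features}\n"
        "Alt-text:"
    )


def template_alt_text(image: ReviewImage) -> str:
    """Deterministic fallback that merges both signals without a model."""
    # Capitalize the first character; slicing keeps an empty caption safe.
    sentence = image.caption[:1].upper() + image.caption[1:]
    if image.keywords:
        sentence += "; the reviewer describes the product as " + " and ".join(image.keywords)
    return sentence + "."


def describe(image: ReviewImage,
             generate: Optional[Callable[[str], str]] = None) -> str:
    """Use the supplied model callable if there is one, otherwise the template."""
    if generate is not None:
        return generate(build_prompt(image)).strip()
    return template_alt_text(image)


if __name__ == "__main__":
    living_room = ReviewImage(
        caption="a living room with a couch, table, and pictures on the wall",
        keywords=["green"],
    )
    print(describe(living_room))
```

Passing the model in as a plain callable keeps the pipeline testable and makes the copy-editing step swappable. The template output is likely less fluent than what a language model produces, but it shows which pieces of information reach the final sentence.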

Here's what that output looks like today.

How has it been received?

After three to four months of work, we were ready to conduct another user study with the prototype, and we were thrilled with the feedback. Highlights include:

- More than 83% of participants agreed that this alt-text will make online shopping at Wayfair more accessible.

- 15 out of the 18 participants were likely or very likely to recommend turning on AIDE to other BLV people.

- The majority of participants noted that AIDE made them feel more independent. As one participant put it, "I can get all the information I need and don't have to rely on other people to make an informed decision about what I'm trying to buy."

The journey of taking AIDE from an idea to a working solution has been hugely satisfying, and the Web4All Conference was the ideal venue for us to share this story. As with many other innovations, AIDE would not have happened without Wayfair. Since its earliest days, the company has fostered a culture of innovation and encouraged its technologists to explore uncharted territory to find new ways of improving the online shopping experience for everyone.

If driving new industry innovations and transforming the online shopping experience is the opportunity you have been looking for, the Wayfair Technology team may be for you.