At Wayfair, we want to recommend the right products to customers so that they can find what they are looking for. However, customers may change their preferences over time, both based on external factors (e.g. customers may shift towards more premium materials if their purchasing power increases) and in-house factors (e.g. they may see a new kind of design/material/style on Wayfair that they really like, but were previously unaware of). Moreover, customers may look for similar characteristics (e.g. value, style) across different types of furniture that they buy. In this post, we will present a new Multi-headed Attention Recommender System (MARS) that uses sequential inputs to learn changing customer tastes, and hence provide recommendations that better match customers’ latest preferences.

Motivation for MARS Architecture

Wayfair’s catalog is constantly changing - and so are customer tastes. Moreover, when customers change their preferences for a certain type of furniture (e.g. a different material, style or value), it is likely that their new preferences will carry over to other product types that they may order in the future. If we want our models to adapt to customers’ changing preferences, we need a model architecture that can handle sequential input data. For example, a customer may, after browsing one type of bed (e.g. traditional wooden beds), decide they actually want a different style (e.g. modern metal/wood hybrid beds). However, because the bulk of their browse history is traditional beds, a model that ignores the order of their browse history will primarily recommend traditional beds, as it cannot distinguish the customer's more recent preferences.

Transformer networks are well suited to this problem. Because they handle sequential inputs via self-attention, they can learn how customer preferences change over the course of a browse history. Unlike other sequential models such as recurrent neural networks, which must process a sequence one step at a time, transformers can process all positions of a sequence in parallel and hence train much faster. MARS is a transformer network largely based on SASRec (Self-Attentive Sequential Recommendation), an open-source recommender model.

The input to MARS is very simple - just the sequence of items that a customer has browsed. We do some cleaning to remove very rarely encountered items, and also remove adjacent duplicate SKUs (so itemA → itemB → itemB → itemC → itemA becomes itemA → itemB → itemC → itemA).
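The two cleaning steps can be sketched in Python as below. This is a minimal illustration, not MARS' actual pipeline: the `min_count` threshold and the choice to filter rare items before deduplicating are our assumptions, since the post does not say what counts as "very rare". All function names are hypothetical.

```python
from collections import Counter
from itertools import groupby


def filter_rare_items(sequences, min_count):
    """Drop items seen fewer than `min_count` times across all sequences."""
    counts = Counter(item for seq in sequences for item in seq)
    return [[item for item in seq if counts[item] >= min_count] for seq in sequences]


def collapse_adjacent_duplicates(seq):
    """Collapse each run of the same item into a single occurrence, keeping order."""
    return [item for item, _ in groupby(seq)]


def clean_sequences(sequences, min_count=2):
    # Filter rare items first: removing one can make its neighbours adjacent
    # (e.g. A -> rare -> A), so deduplication runs afterwards.
    filtered = filter_rare_items(sequences, min_count)
    return [collapse_adjacent_duplicates(seq) for seq in filtered]


print(collapse_adjacent_duplicates(["itemA", "itemB", "itemB", "itemC", "itemA"]))
# ['itemA', 'itemB', 'itemC', 'itemA']
```

Note that only *adjacent* repeats are collapsed: the final itemA survives because the customer returned to it later, which is exactly the kind of recency signal the model should see.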

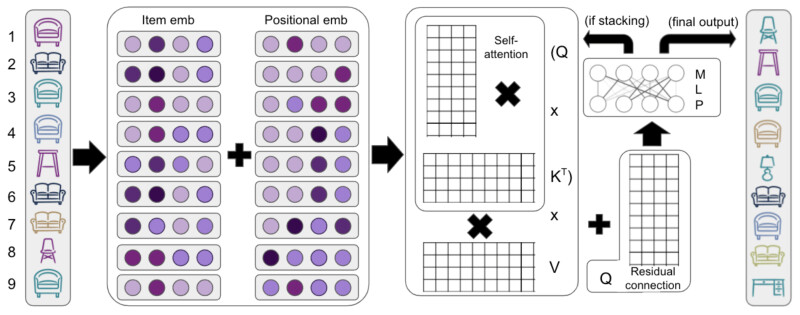

MARS (Fig. 1) is trained with self-attention, and the similarity between different products that it learns is stored in an item embedding. Similarly, the positional information (whether the product was viewed first, second, third etc.) is stored in a positional embedding. This has the same dimensionality as the item embedding so that the two embeddings can be added together. We could instead concatenate them, but this would greatly increase model complexity (and hence training time). The positional embeddings need not be learned: the original transformer paper used fixed (sinusoidal) positional encodings, whereas later models such as BERT learn them, as MARS does. The summed embeddings are passed through self-attention layers, followed by a final fully-connected layer with a sigmoid activation, trained with a binary cross-entropy loss. Each attention block is followed by a residual connection. The final output is a score for every item in the training set; ranking these scores gives the top n recommendations. Note that the recommended SKUs may include both items that were previously viewed and new items from previously unseen classes of products (e.g. desks and table lamps in Fig. 1).
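To make the data flow concrete, here is a toy, pure-Python sketch of one forward pass: sum the item and positional embeddings, apply a single self-attention head, then score every item with a sigmoid and rank. It is a simplified illustration under stated assumptions, not the MARS implementation. It assumes a causal mask (as in SASRec) so that each position only attends to earlier views; it omits the learned query/key/value projections, multiple heads, stacked layers and the training loop; and it reuses the item embeddings as the output layer's weights (the weight tying SASRec uses), whereas the post only says a fully-connected layer is used. All names and toy values are hypothetical.

```python
import math


def add_embeddings(item_emb, pos_emb, seq):
    """Sum each item's embedding with the embedding of its position in the sequence."""
    return [[a + b for a, b in zip(item_emb[item], pos_emb[pos])]
            for pos, item in enumerate(seq)]


def causal_self_attention(x):
    """Single-head scaled dot-product attention where position t attends only to 0..t.

    For brevity, queries, keys and values are the inputs themselves; a real layer
    first multiplies them by learned projection matrices.
    """
    d = len(x[0])
    out = []
    for t, q in enumerate(x):
        # Causal mask: only score the current and earlier positions.
        scores = [sum(a * b for a, b in zip(q, x[s])) / math.sqrt(d) for s in range(t + 1)]
        m = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(sc - m) for sc in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        out.append([sum(w * x[s][j] for s, w in enumerate(weights)) for j in range(d)])
    return out


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def binary_cross_entropy(p, label, eps=1e-12):
    """Training loss for one predicted probability p against a 0/1 label."""
    p = min(max(p, eps), 1.0 - eps)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))


def top_n(final_state, item_emb, n, exclude=()):
    """Score all items from the last position's output and return the n best.

    Previously viewed items are kept in the ranking unless passed in `exclude`.
    """
    scores = {item: sigmoid(sum(a * b for a, b in zip(final_state, vec)))
              for item, vec in item_emb.items() if item not in exclude}
    return sorted(scores, key=scores.get, reverse=True)[:n]


item_emb = {"bed": [1.0, 0.0], "nightstand": [0.9, 0.1], "sofa": [0.0, 1.0]}
pos_emb = [[0.0, 0.0], [0.1, 0.1]]
h = causal_self_attention(add_embeddings(item_emb, pos_emb, ["bed", "nightstand"]))
print(top_n(h[-1], item_emb, n=2))  # ['bed', 'nightstand']
```

Because the mask is causal, the output at the first position depends only on the first view, while the last position summarizes the whole history; that last output is what gets scored against every item.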

To prevent overfitting, MARS uses standard dropout and L2 regularization. To keep the gradients stable during backpropagation, we use layer normalization and residual connections.
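A minimal sketch of these pieces, assuming vectors are plain Python lists: layer normalization (without the learned gain and bias), inverted dropout, a residual wrapper and an L2 penalty. The `x + Dropout(sublayer(LayerNorm(x)))` ordering follows SASRec; the original transformer instead applies layer normalization after the residual sum, and the post does not say which ordering MARS uses. The dropout rate, penalty weight and function names are illustrative assumptions.

```python
import math
import random


def layer_norm(x, eps=1e-8):
    """Normalize a vector to zero mean and unit variance (learned gain/bias omitted)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def dropout(x, rate, training, rng=random):
    """Inverted dropout: zero each unit with probability `rate`, rescale the survivors."""
    if not training or rate == 0.0:
        return list(x)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in x]


def residual_sublayer(x, sublayer, rate=0.2, training=True, rng=random):
    """SASRec-style block: x + Dropout(sublayer(LayerNorm(x)))."""
    y = dropout(sublayer(layer_norm(x)), rate, training, rng)
    # The identity path lets gradients flow straight through to earlier layers.
    return [a + b for a, b in zip(x, y)]


def l2_penalty(weights, lam):
    """L2 regularization term added to the loss: lam * sum of squared weights."""
    return lam * sum(w * w for w in weights)
```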

Results

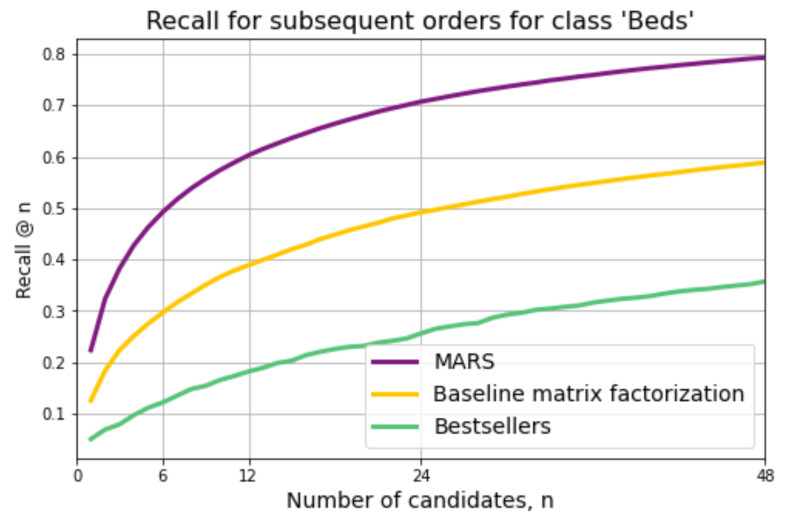

1. MARS lift for substitutable recommendations by learning sequential behavior

We first apply MARS to product views from just one class (here, beds), and compare it against a baseline matrix factorization method that uses the same training data as MARS, except that the data is aggregated rather than sequential. Adding positional information could help the model adapt to changing customer preferences, as more recent views may be better indicators of a customer’s current preferences. In Fig. 2, we see that even though MARS is trained on the same data as the baseline, it improves recall (the number of successful recommendations divided by the number of targets) by 67%. This suggests that the positional information is, in fact, very useful for making more relevant recommendations.
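Recall as defined here can be computed as below. This sketch assumes one held-out target item per customer and a cutoff `k` on the ranked list; the post does not state the cutoff or how targets were chosen, so both are assumptions, and the function names are hypothetical. `relative_lift` shows one way to express a relative improvement over a baseline.

```python
def recall_at_k(recommendations, targets, k=10):
    """Fraction of targets that appear in the corresponding top-k recommendation list.

    recommendations: one ranked list of item ids per customer.
    targets: the held-out item each customer went on to view (one per customer).
    """
    if len(recommendations) != len(targets):
        raise ValueError("need exactly one recommendation list per target")
    hits = sum(1 for recs, target in zip(recommendations, targets) if target in recs[:k])
    return hits / len(targets)


def relative_lift(new, baseline):
    """Relative improvement of `new` over `baseline`; 0.5 means +50%."""
    return (new - baseline) / baseline


recs = [["a", "b", "c"], ["d", "e", "f"]]
print(recall_at_k(recs, ["b", "z"], k=2))  # 0.5
```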

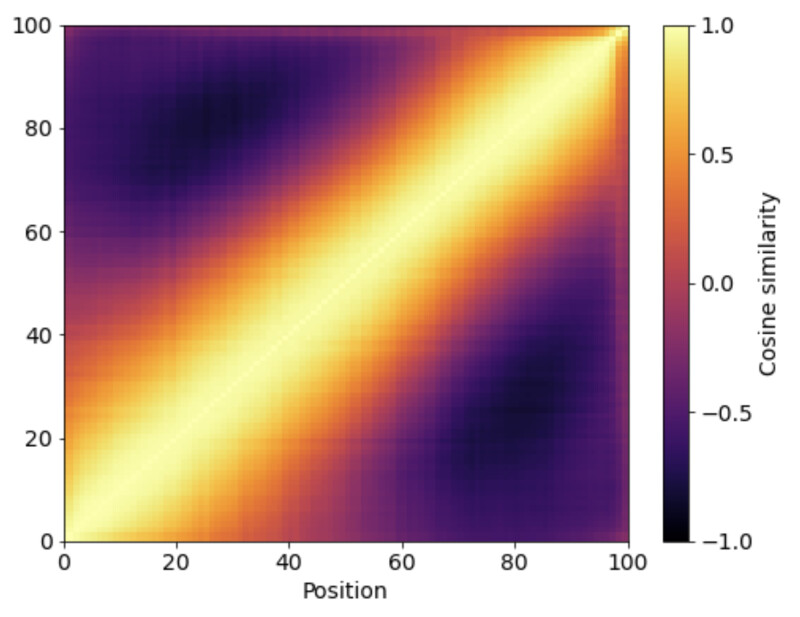

As a further check, we can look at the learned positional embeddings to see what they converged to (Fig. 3). We can clearly see that positions that are close together have more similar embeddings. This is exactly what we want to see, as it shows that the order of the sequence is important and relevant. The fuzziness of the diagonal line in Fig. 3 shows that the model is robust to slight perturbations of the sequence, which is desirable: if we swap two adjacent items in a long sequence, we should not expect vastly different recommendations. We can also see that the fuzziness decreases as the sequence approaches the present (position 100 is the most recently viewed item). Sequences from customers who have viewed more than 100 items are truncated to the most recent 100 views; sequences from customers with fewer than 100 views are zero-padded to length 100.
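The truncation and padding rule can be sketched as follows. We assume item id 0 is reserved for padding and that padding is added on the left, so that the final position always holds the most recent view, consistent with position 100 being the most recently viewed item. The constant and helper names are hypothetical.

```python
PAD_ID = 0  # assumed: id 0 is reserved for padding; real items are numbered from 1


def pad_or_truncate(seq, max_len=100, pad_id=PAD_ID):
    """Keep the most recent `max_len` views and left-pad shorter histories.

    Left padding keeps the most recently viewed item in the last position.
    """
    if max_len <= 0:
        raise ValueError("max_len must be positive")
    recent = seq[-max_len:]
    return [pad_id] * (max_len - len(recent)) + recent


print(pad_or_truncate([5, 6, 7], max_len=5))  # [0, 0, 5, 6, 7]
```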

2. Transferability of learned customer style preferences

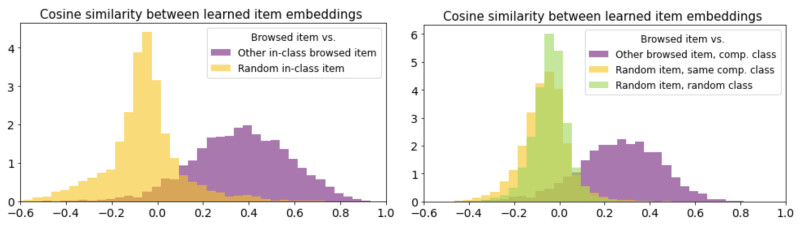

Because customers commonly browse certain classes of products together, we can expect the learned item embeddings to reflect this. Indeed, in Fig. 4 we can see that commonly co-ordered classes (e.g. beds and nightstands) and co-viewed classes (e.g. sofas and sectionals) are clustered together, even though no class information is provided during training. However, within each class, are similar items (e.g. items of a similar style) also grouped together? More broadly, we want to see whether MARS’ learned item embeddings connect not only similar items within the same class, but also similar items across unrelated classes.

Commonly co-viewed, or “substitutable”, classes (e.g. sofas and sectionals) have very similar item embeddings, so customer preferences transfer easily between them; however, this may simply reflect MARS learning tangible features like color, shape, size and price rather than style. Commonly co-ordered, or “complementary”, classes (e.g. sofas and coffee tables) share fewer features like material, shape or size, so if we can show that customers browse similar items across complementary classes, this is much stronger evidence of style transfer.

However, the class signal is so strong that it is hard to compare browsed items with random items from the same class: the cosine similarity will be similarly high in both cases. To rule this out, we can subtract the mean embedding of each class, which should remove the class signal and leave behind other item characteristics (e.g. style or value). After doing this, we can see in Fig. 5 that co-browsed items are much more likely to have similar characteristics even with the class signal removed, which strongly hints that MARS is learning transferable customer preferences.
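The demeaning step can be sketched with toy two-dimensional embeddings, where the first coordinate plays the role of the class signal and the second a hypothetical style signal. This illustrates the technique only and is not Wayfair's analysis code; class labels are used solely for this post-hoc analysis (never during training), and all item names and values are made up.

```python
import math
from collections import defaultdict


def demean_by_class(embeddings, item_class):
    """Subtract each class's mean embedding from the embeddings of its items."""
    groups = defaultdict(list)
    for item, vec in embeddings.items():
        groups[item_class[item]].append(vec)
    means = {c: [sum(col) / len(vecs) for col in zip(*vecs)] for c, vecs in groups.items()}
    return {item: [v - m for v, m in zip(vec, means[item_class[item]])]
            for item, vec in embeddings.items()}


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


emb = {
    "bed_modern": [5.0, 1.0], "bed_traditional": [5.0, -1.0],
    "nightstand_modern": [-5.0, 1.0], "nightstand_traditional": [-5.0, -1.0],
}
cls = {item: item.split("_")[0] for item in emb}

raw = cosine(emb["bed_modern"], emb["nightstand_modern"])  # about -0.92: class signal dominates
demeaned = demean_by_class(emb, cls)
print(cosine(demeaned["bed_modern"], demeaned["nightstand_modern"]))  # 1.0
```

Before demeaning, the two "modern" items look dissimilar because they sit in different class clusters; after subtracting each class mean, only the shared style coordinate remains and their similarity becomes visible.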

Fig. 5. The cosine similarity of the demeaned embeddings is higher for co-browsed items than for a random item from the same class (left); similarly, the cosine similarity for co-browsed complementary items is higher than for a random item, whether drawn from the same complementary class or from a random class. This suggests that MARS can learn customer preferences that carry over to other browsed classes. These preferences may be style, value, or other item characteristics.

Conclusion

By using a transformer model, we are able to take a very simple input - a list of browsed items, with no other information - and greatly improve the success rate of recommendations served on the main browse pages for each class. For customers with browse history in that class, our MARS model dramatically increases the recall of our recommendations, by ~67%. For customers without any browse history in that class, we have shown that MARS is able to learn transferable item characteristics (e.g. style) that carry across from previously browsed classes. Future iterations of this model will include more complex inputs, such as customer demographic information and product image embeddings, which should further improve our recommendation quality. MARS is a standout star of our recommendation models and certainly has a very bright future!