Machine learning (ML) has profoundly transformed the digital shopping experience for millions of people worldwide. We’ve seen this at Wayfair, where our teams continuously deliver intelligent, improved experiences for our customers by integrating ML at every stage of their journey with us. Wayfair currently runs hundreds of ML applications that improve marketing campaigns and the visual merchandising of the products we sell, personalize product recommendations, enable high-quality customer service, and more.

Behind these experiences are complex ML applications and a suite of purpose-built tools, services, and platforms managed by internal groups such as the ML Platforms team. That’s where we work, and our mission is to ensure that transitions between each stage of the ML lifecycle are smooth, standardized, and automated.

As Wayfair’s business and customer base grew, our mission became more complex, and we quickly realized that our existing infrastructure was insufficient. We needed an alternative path forward that could keep up with our growing demands. Here’s our story.

The Beginning

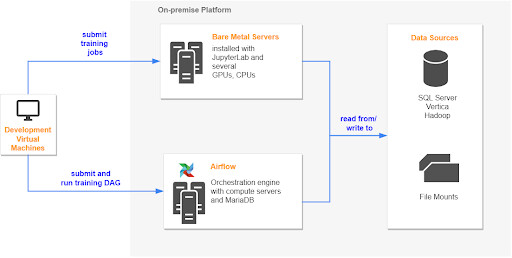

Previously, we relied largely on shared on-premises infrastructure for model development and training (Figure 1).

As recently as last year, our users (data scientists and machine learning engineers) performed local development on dedicated virtual machines. These machines were resource-constrained, so computationally expensive training jobs such as large Spark jobs often failed or ran out of memory.

The alternative was to use shared bare-metal machines configured with terabytes of RAM, several GPUs, and several hundred cores. Unfortunately, these shared machines presented issues:

- Users frequently encountered resource contention, and these issues were magnified by ‘noisy neighbors’ who over-provisioned their jobs or ran suboptimal code that claimed most of the resources.

- The machines were not easily scalable, and procuring additional compute required long lead times that limited the development velocity for our user community.

Hello Google!

In recent years, Wayfair embarked on a journey to adopt Google Cloud Platform (GCP) for infrastructure, storage, and now ML capabilities (for more details, see The Road to the Cloud blog). The process of transforming our ML platforms began with an evaluation of Google’s model training product. The offering not only integrated well with the rest of our GCP ecosystem (BigQuery, Cloud Storage, Container Registry, etc.) but also offered scalable, on-demand compute. Moreover, piggybacking on the existing GCP infrastructure and permission controls would let our team deliver faster.

Our Mandate

Our mandate was to offer an end-to-end solution that included tools to train models at scale while delivering an efficient and intuitive experience. This required the following:

Well-Integrated with In-House Solutions

We offer users a range of solutions that serve the full end-to-end ML lifecycle, including capabilities for storing models and features, serving models, and more. We needed to ensure that these would integrate with the new offering. To do so, we extended the existing libraries to make them easier to use: they now automatically store models after training runs and fetch features from the feature library into a data frame.
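As an illustration of the "store after training" behavior, the sketch below wraps a training function in a decorator that saves whatever model it returns. Wayfair’s model storage API is not described in this post, so `InMemoryModelStore`, `store_model_after_training`, and the `name/version` key format are hypothetical stand-ins rather than the actual library.

```python
import functools
import pickle
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List


class InMemoryModelStore:
    """Hypothetical stand-in for an in-house model storage client."""

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}

    def save(self, key: str, model: Any) -> None:
        # Serialize so the stored copy is independent of later mutations.
        self._blobs[key] = pickle.dumps(model)

    def load(self, key: str) -> Any:
        return pickle.loads(self._blobs[key])

    def keys(self) -> List[str]:
        return sorted(self._blobs)


def store_model_after_training(store: InMemoryModelStore, model_name: str) -> Callable:
    """Decorator factory: persist the model returned by the wrapped training function."""

    def decorator(train_fn: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(train_fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            model = train_fn(*args, **kwargs)
            # A UTC timestamp (to the microsecond) versions each training run.
            version = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%fZ")
            store.save(f"{model_name}/{version}", model)
            return model

        return wrapper

    return decorator


store = InMemoryModelStore()


@store_model_after_training(store, model_name="dedup-classifier")
def train(threshold: float) -> dict:
    # Placeholder "model"; a real job would fit an estimator here.
    return {"threshold": threshold}


trained = train(0.8)
print(store.keys())
```

A real integration would swap the in-memory store for the platform’s client and would likely record run metadata (metrics, parameters) alongside the serialized model.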

Usable and Accessible From Airflow

Airflow is our primary orchestration engine for ML and other data engineering workflows. To enhance developer velocity and improve usability, we created several custom Airflow operators:

- A training operator submits training jobs to Google’s training product from within an Airflow DAG. This light wrapper around the gcloud tooling let users migrate seamlessly to the new training platform while maintaining separation between orchestration and compute.

- A model and feature operator saves and retrieves trained models from our in-house model storage platform and connects to saved features or training datasets in Wayfair’s feature library.

- To speed up DAG authoring, a third operator automatically generates code and helper methods for common operations such as submitting training jobs, parsing the output from those jobs, and moving or copying data into Google Cloud Storage.
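A minimal sketch of the command-building core that a training-submission operator could wrap. It assumes a custom-container job submitted with `gcloud ai-platform jobs submit training`; the helper names, job ID, image URI, and labels are hypothetical, and Wayfair’s actual operators may differ. Inside an Airflow `BaseOperator` subclass, `execute(context)` would call `submit_training_job(cmd, dry_run=False)`.

```python
import shlex
import subprocess
from typing import Dict, List, Optional


def build_submit_command(
    job_id: str,
    region: str,
    image_uri: str,
    scale_tier: str = "BASIC",
    labels: Optional[Dict[str, str]] = None,
    user_args: Optional[List[str]] = None,
) -> List[str]:
    """Build argv for submitting a custom-container AI Platform Training job."""
    cmd = [
        "gcloud", "ai-platform", "jobs", "submit", "training", job_id,
        f"--region={region}",
        f"--master-image-uri={image_uri}",
        f"--scale-tier={scale_tier}",
    ]
    if labels:
        # gcloud takes labels as comma-separated KEY=VALUE pairs.
        cmd.append("--labels=" + ",".join(f"{k}={v}" for k, v in sorted(labels.items())))
    if user_args:
        # Arguments after a bare "--" are passed through to the training container.
        cmd += ["--", *user_args]
    return cmd


def submit_training_job(cmd: List[str], dry_run: bool = True) -> str:
    """Run the command, or return it as a shell-quoted string when dry_run is True."""
    if dry_run:
        return shlex.join(cmd)
    result = subprocess.run(cmd, check=True, capture_output=True, text=True)
    return result.stdout


# Hypothetical job; in an Airflow operator, execute(context) would make these calls.
cmd = build_submit_command(
    job_id="dedup_train_20210101",
    region="us-central1",
    image_uri="gcr.io/my-project/trainer:abc123",
    labels={"team": "catalog"},
    user_args=["--epochs=5"],
)
print(submit_training_job(cmd))  # dry run: prints the command without submitting
```

Keeping the command builder as a plain function, separate from the operator class, makes it easy to unit test without an Airflow installation.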

Compatible with Multiple Distributed ML Frameworks

Our users rely on a variety of distributed frameworks to process computationally intensive jobs. Google’s training platform provides out-of-the-box support for TensorFlow and PyTorch. To make it even easier for users to scale on the cloud, we extended this support with pre-configured Dask and Horovod clusters, which accommodate general-purpose and deep learning training jobs, respectively. Because the containers are preconfigured, users can connect to a Dask or Horovod cluster without worrying about the engineering complexity of setting up the cluster.
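One way a preconfigured container could join a Dask cluster is sketched below. On AI Platform Training, each replica receives a `TF_CONFIG` environment variable describing the cluster; this sketch assumes the master (or chief) replica hosts the Dask scheduler on Dask’s default port 8786 and that every other replica starts a worker pointing at it. The function name and host names are hypothetical, and older Dask releases expose the entrypoints as `dask-scheduler` and `dask-worker` rather than `dask scheduler` and `dask worker`.

```python
import json
import os
from typing import List, Optional

DASK_SCHEDULER_PORT = 8786  # Dask's default scheduler port


def dask_command_for_replica(tf_config_json: Optional[str] = None) -> List[str]:
    """Return the Dask process this replica should start, based on TF_CONFIG.

    Assumption: the "chief" or "master" replica hosts the scheduler and all
    other replicas run workers that connect to it.
    """
    raw = tf_config_json if tf_config_json is not None else os.environ.get("TF_CONFIG", "{}")
    config = json.loads(raw)
    cluster = config.get("cluster", {})
    task = config.get("task", {})

    head_type = "chief" if "chief" in cluster else "master"
    head_addresses = cluster.get(head_type)
    if not head_addresses:
        raise ValueError("TF_CONFIG has no chief/master entry; not a distributed job")

    # Addresses look like "host:port"; reuse the host but Dask's own port.
    head_host = head_addresses[0].rsplit(":", 1)[0]
    if task.get("type") == head_type:
        return ["dask", "scheduler", "--port", str(DASK_SCHEDULER_PORT)]
    return ["dask", "worker", f"tcp://{head_host}:{DASK_SCHEDULER_PORT}"]


# Hypothetical TF_CONFIG for the second worker in a three-replica job.
example = json.dumps({
    "cluster": {
        "master": ["trainer-master-0:2222"],
        "worker": ["trainer-worker-0:2222", "trainer-worker-1:2222"],
    },
    "task": {"type": "worker", "index": 1},
})
print(dask_command_for_replica(example))  # ['dask', 'worker', 'tcp://trainer-master-0:8786']
```

The container’s entrypoint would then run the returned command. Horovod jobs need a different launcher (typically `horovodrun`), which this sketch does not cover.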

Highly Scalable and Offer a Range of Compute Options

Unlike the on-premises setup, Google’s product offering gives teams fast, isolated, on-demand access to the CPU, RAM, and GPU specifications they need. As a result, teams can now choose from a wide range of hardware configurations, including setups that scale out to multi-GPU training.
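For concreteness, a CUSTOM scale tier request in the AI Platform Training REST API’s `trainingInput` format might be assembled as below. The field names (`scaleTier`, `masterType`, `masterConfig.acceleratorConfig`, `workerType`, `workerCount`, `workerConfig`) follow that API; the helper function, machine type, GPU type, and image URI are illustrative assumptions.

```python
import json
from typing import Any, Dict, Optional


def custom_training_input(
    region: str,
    image_uri: str,
    master_type: str,
    gpu_type: Optional[str] = None,
    gpu_count: int = 0,
    worker_type: Optional[str] = None,
    worker_count: int = 0,
) -> Dict[str, Any]:
    """Assemble a CUSTOM-tier trainingInput body for a custom-container job."""
    spec: Dict[str, Any] = {
        "scaleTier": "CUSTOM",
        "region": region,
        "masterType": master_type,
        "masterConfig": {"imageUri": image_uri},
    }
    if gpu_count > 0:
        if gpu_type is None:
            raise ValueError("gpu_type is required when gpu_count > 0")
        # Attach GPUs to the master replica only.
        spec["masterConfig"]["acceleratorConfig"] = {"type": gpu_type, "count": gpu_count}
    if worker_count > 0:
        if worker_type is None:
            raise ValueError("worker_type is required when worker_count > 0")
        # Workers run the same image as the master.
        spec["workerType"] = worker_type
        spec["workerCount"] = worker_count
        spec["workerConfig"] = {"imageUri": image_uri}
    return spec


# Hypothetical single-node, four-GPU request.
spec = custom_training_input(
    region="us-central1",
    image_uri="gcr.io/my-project/trainer:abc123",
    master_type="n1-highmem-16",
    gpu_type="NVIDIA_TESLA_V100",
    gpu_count=4,
)
print(json.dumps({"trainingInput": spec}, indent=2))
```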

Customizable for Advanced Users

To improve the portability and reproducibility of training, we offered our users custom container workflows, which let them submit training jobs that run in their own containers. Users author a Dockerfile that configures the container and manages the dependencies the training application needs, build an image from it, and push that image to Google’s Container Registry.
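A small sketch of one way to make custom-container builds reproducible: tag each image with a hash of its build inputs, so identical inputs map to identical tags. The `gcr.io/PROJECT/IMAGE:TAG` URI layout is Container Registry’s; the project and image names, the helper functions, and the choice to hash only the Dockerfile and an optional `requirements.txt` are assumptions for illustration.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import List, Tuple


def content_tag(*files: Path, length: int = 12) -> str:
    """Return a short SHA-256 tag over the names and bytes of the given files."""
    digest = hashlib.sha256()
    for path in sorted(files):  # sort so argument order does not change the tag
        digest.update(path.name.encode())
        digest.update(path.read_bytes())
    return digest.hexdigest()[:length]


def build_and_push_commands(project: str, image: str, context_dir: Path) -> Tuple[str, List[List[str]]]:
    """Return the image URI plus the docker build and push commands for it."""
    dockerfile = context_dir / "Dockerfile"
    inputs = [dockerfile]
    requirements = context_dir / "requirements.txt"
    if requirements.exists():
        inputs.append(requirements)
    uri = f"gcr.io/{project}/{image}:{content_tag(*inputs)}"
    commands = [
        ["docker", "build", "-t", uri, "-f", str(dockerfile), str(context_dir)],
        ["docker", "push", uri],
    ]
    return uri, commands


with tempfile.TemporaryDirectory() as tmp:
    ctx = Path(tmp)
    (ctx / "Dockerfile").write_text('FROM python:3.9-slim\nENTRYPOINT ["python", "-m", "trainer.task"]\n')
    uri, commands = build_and_push_commands("my-project", "dedup-trainer", ctx)
    same_uri, _ = build_and_push_commands("my-project", "dedup-trainer", ctx)

for cmd in commands:
    # Print only; pass each list to subprocess.run(cmd, check=True) to execute.
    print(" ".join(cmd))
```

Hashing only these files means a change to training code copied into the image would not change the tag; a stricter variant would hash the whole build context.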

Analytics to Understand Usage and Track Costs

To better understand how our users leverage the product, we also implemented a standard tagging schema that captures metadata about each training run. We also used Google’s built-in logging and consolidated the default and custom data to present a complete view of our users and their training jobs. We have successfully used this information to track costs and monitor internal adoption.
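A tagging schema like this could be enforced with a small helper that validates labels before a job is submitted. Google Cloud labels use lowercase letters, digits, underscores, and dashes, keys must start with a letter, and keys and values are limited to 63 characters (this sketch ignores the international characters Google also permits). The required keys below are a hypothetical schema, not Wayfair’s actual one. The result can be passed to `gcloud ai-platform jobs submit training` via `--labels` or set as the job resource’s `labels` field.

```python
import re
from typing import Dict

# Hypothetical tagging schema: keys every training job must carry.
REQUIRED_LABEL_KEYS = ("team", "owner", "project", "pipeline")

# Google Cloud label rules (ASCII subset): keys start with a lowercase letter,
# keys and values use lowercase letters, digits, "_" and "-", max 63 chars.
_KEY_RE = re.compile(r"[a-z][a-z0-9_-]{0,62}")
_VALUE_RE = re.compile(r"[a-z0-9_-]{0,63}")


def normalize_label_value(value: str) -> str:
    """Lowercase a value and replace disallowed characters with underscores."""
    return re.sub(r"[^a-z0-9_-]", "_", value.lower())[:63]


def build_job_labels(**values: str) -> Dict[str, str]:
    """Check the schema and return labels ready to attach to a training job."""
    missing = [key for key in REQUIRED_LABEL_KEYS if key not in values]
    if missing:
        raise ValueError(f"missing required labels: {missing}")
    labels = {key: normalize_label_value(value) for key, value in values.items()}
    for key, value in labels.items():
        if not _KEY_RE.fullmatch(key) or not _VALUE_RE.fullmatch(value):
            raise ValueError(f"invalid label {key}={value!r}")
    return labels


def labels_flag(labels: Dict[str, str]) -> str:
    """Format labels for gcloud's --labels flag (comma-separated KEY=VALUE)."""
    return "--labels=" + ",".join(f"{k}={v}" for k, v in sorted(labels.items()))


labels = build_job_labels(team="Catalog-DS", owner="jdoe", project="dedup", pipeline="nightly_retrain")
print(labels_flag(labels))
```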

Below is a schematic of the solution we delivered to our users, which integrates well with the Wayfair ecosystem.

Proof of Success

Recently, Matt Ferrari, Head of MarTech & Data & ML Platforms, spoke at the Google Next 2021 conference and shared our early wins with this new AI Platform Training solution: “...we have a lot of very large training jobs that are now five to ten times faster at this point and we have gotten feedback from our data scientists that they specifically like all of the hyperparameter tuning available to them.”

Matt was referring to users such as Wayne Wang. Wayne leads the data science team that develops classification-based models that help remove duplicates and improve Wayfair’s product catalog. Wayne’s team was able to achieve higher development velocity by leveraging custom operators and on-demand compute while performing hyperparameter tuning in parallel.
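For readers unfamiliar with parallel tuning on AI Platform Training, a job’s `trainingInput.hyperparameters` block sets how many trials run in total and how many run at once. The field names below follow that API; the parameter names, ranges, and metric tag are hypothetical, and this is not a description of Wayfair’s actual tuning jobs. The `sequential_rounds` helper shows the basic arithmetic: with 20 trials and 5 running at a time, the search needs about 4 rounds instead of 20.

```python
import math
from typing import Any, Dict, List


def hyperparameter_spec(metric_tag: str, max_trials: int, max_parallel_trials: int) -> Dict[str, Any]:
    """Build a trainingInput.hyperparameters block for a maximization search."""
    if not 1 <= max_parallel_trials <= max_trials:
        raise ValueError("expected 1 <= max_parallel_trials <= max_trials")
    # Hypothetical search space; each trial receives values as --name=value flags.
    params: List[Dict[str, Any]] = [
        {"parameterName": "learning_rate", "type": "DOUBLE",
         "minValue": 1e-4, "maxValue": 1e-1, "scaleType": "UNIT_LOG_SCALE"},
        {"parameterName": "max_depth", "type": "INTEGER",
         "minValue": 3, "maxValue": 10, "scaleType": "UNIT_LINEAR_SCALE"},
        {"parameterName": "class_weight", "type": "CATEGORICAL",
         "categoricalValues": ["none", "balanced"]},
    ]
    return {
        "goal": "MAXIMIZE",
        "hyperparameterMetricTag": metric_tag,
        "maxTrials": max_trials,
        "maxParallelTrials": max_parallel_trials,
        "params": params,
    }


def sequential_rounds(max_trials: int, max_parallel_trials: int) -> int:
    """Approximate number of back-to-back batches if every trial takes similar time."""
    return math.ceil(max_trials / max_parallel_trials)


spec = hyperparameter_spec("f1_score", max_trials=20, max_parallel_trials=5)
print(sequential_rounds(20, 5))  # 4
```

Running more trials in parallel shortens wall-clock time, but it also gives an adaptive tuner fewer completed trials to learn from before choosing the next ones.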

Other teams have since built on these solutions, including Andrew Redd’s. Andrew works on the data science team that powers Wayfair’s competitive intelligence algorithms. Using the new GCP-powered framework, the team quickly scaled out hundreds of product category-level XGBoost models and reduced their computational footprint by roughly 30 percent. They did this by provisioning the right machine size for each product category, replacing the one-size-fits-all constraints they had experienced with Spark jobs.
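A sketch of per-category right-sizing under stated assumptions: estimate each category’s training memory, add headroom, and choose the smallest machine that fits. The n1-highmem types and memory sizes are Compute Engine’s (6.5 GB per vCPU); the 1.5x headroom, category names, and memory estimates are hypothetical, and the post does not say how the team actually sized machines. Check which machine types the training service accepts in your region before relying on this list.

```python
from typing import Dict, List, Tuple

# Compute Engine n1-highmem machine types and their memory in GB.
HIGHMEM_TYPES: List[Tuple[str, float]] = [
    ("n1-highmem-2", 13.0),
    ("n1-highmem-4", 26.0),
    ("n1-highmem-8", 52.0),
    ("n1-highmem-16", 104.0),
    ("n1-highmem-32", 208.0),
    ("n1-highmem-64", 416.0),
]


def pick_machine_type(estimated_gb: float, headroom: float = 1.5) -> str:
    """Return the smallest machine whose memory covers the estimate plus headroom."""
    needed = estimated_gb * headroom
    for name, memory_gb in HIGHMEM_TYPES:  # ordered smallest to largest
        if memory_gb >= needed:
            return name
    raise ValueError(f"no single machine type fits {needed:.1f} GB")


def plan_category_jobs(category_sizes_gb: Dict[str, float]) -> Dict[str, str]:
    """Map each product category to its own machine type instead of one shared size."""
    return {category: pick_machine_type(size) for category, size in category_sizes_gb.items()}


# Hypothetical memory estimates per category.
plan = plan_category_jobs({"rugs": 4.0, "sofas": 30.0, "lighting": 60.0})
print(plan)
```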

What's Next

While the team has already experienced some early successes, we are still at the beginning of our journey to transform Wayfair’s model training. We are currently working to further improve the availability of different types of compute options, unlock paths for models that leverage Spark, and streamline model retraining and back-testing pipelines. From there, we will also invest in formal experiment tracking and hyperparameter tuning solutions. We look forward to sharing the continued evolution of our ML Platforms in the future.