In early August, the Data Science (DS) team held its first DS-wide hackathon. The global event brought together Boston- and Berlin-based data scientists for one day of hacking and demos, and challenged teams to think big about how algorithms can transform Wayfair’s business and uncover new possibilities. Some successful tools and products on our site, like our Visual Search tool, started as hackathon projects! The hackathon is a fun opportunity to try out new methods or algorithms, explore a different part of the business, and collaborate with team members across disciplines.

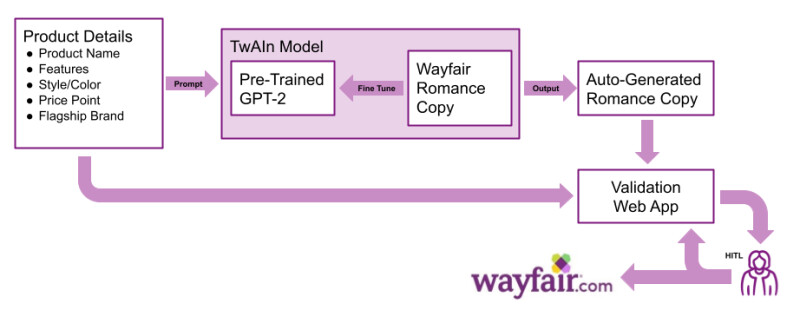

For the hackathon, our four-person team set out to build a new solution for product merchandising: auto-generating product descriptions with OpenAI’s GPT models. We named our final project TwAIn: The Voice of Wayfair in a Generative Text Model. It was great to experiment with a type of model we had never used before and apply it to a tricky business problem, and our team ultimately won the hackathon’s People’s Choice Award.

At Wayfair, we refer to product descriptions as “Romance Copy” or “Marketing Copy”. We have a lot of factual information about the products we sell (think key-value pairs), so romance copy exists to offer more colorful descriptions that convey a product’s style and feel to our customers, something that can be hard to communicate in an all-online marketplace.

So we know that romance copy is great, but why try to auto-generate it? When suppliers upload products into our catalog, they sometimes leave out details, including romance copy. As a result, some products on Wayfair.com don’t have descriptions, which can hurt the customer experience and potentially those products’ SEO. Missing descriptions are only part of the problem, though. For some products that we know will perform well, a Wayfair team manually rewrites the descriptions to better fit our style and brand. By filling in missing descriptions and applying top-tier branding across all our products, we think we can make a big impact.

Working with Text Generation

This problem of filling in or auto-branding product descriptions can be tackled in a couple of ways. We could easily build a formula for a single product type, like Area Rugs, where noun or adjective slots are filled with values randomly selected from predefined lists for attributes such as style, material, or color. That formula, however, wouldn’t transfer well to a different type of product, like Sofas. Generative text models address this scalability problem: they can be trained on multiple product types, languages, and brands. Generative text also helps our descriptions sound less generic and formulaic.
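For contrast, here is a minimal Python sketch of the formula approach. The attribute lists, the template sentence, and the `fill_template` helper are hypothetical illustrations, not Wayfair’s actual merchandising data. Everything in it is rug-specific, so supporting Sofas would mean hand-writing a new template and new lists.

```python
import random

# Hypothetical attribute lists for one product class (Area Rugs).
RUG_FIELDS = {
    "style": ["traditional", "modern", "bohemian"],
    "material": ["wool", "jute", "cotton"],
    "color": ["ivory", "navy", "charcoal"],
}

# A fixed sentence template: the formula only knows about rug attributes.
RUG_TEMPLATE = "This {style} area rug is made of {material} in a rich {color} tone."


def fill_template(template, fields, rng):
    """Randomly pick one value per field and substitute it into the template."""
    choices = {name: rng.choice(values) for name, values in fields.items()}
    return template.format(**choices)


# Seeded RNG so the output is reproducible from run to run.
print(fill_template(RUG_TEMPLATE, RUG_FIELDS, random.Random(0)))
```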

To tackle the generative text problem, we turned to OpenAI’s GPT models. GPT-2 and GPT-3 are pre-trained, unsupervised generative text models, trained on a next-word prediction task. Given an input sequence, or prompt, the models iteratively predict the next word, writing sentences, paragraphs, or essays depending on the prompt. The pre-trained model can be used through zero-, one-, or few-shot learning [1], or through fine-tuning.
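To make iterative next-word prediction concrete, the toy sketch below uses a word-bigram counter as a stand-in for GPT-2’s neural network. Only the greedy decoding loop mirrors how a GPT model extends a prompt one step at a time. The corpus and helper names are hypothetical. Real GPT models predict subword tokens from the whole preceding context, not just the last word, and often sample instead of always taking the most likely token.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count which words follow each word (a toy stand-in for a trained language model)."""
    follows = defaultdict(Counter)
    for text in corpus:
        words = text.split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def generate(follows, prompt, max_new_words=10):
    """Greedy autoregressive decoding: repeatedly append the most likely next word."""
    words = prompt.split()
    if not words:
        raise ValueError("prompt must contain at least one word")
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the model has never seen any word follow this one
        next_word, _count = candidates.most_common(1)[0]
        words.append(next_word)
    return " ".join(words)


corpus = ["this rug is hand woven", "this rug is made of wool"]
model = train_bigram(corpus)
print(generate(model, "this rug", max_new_words=3))
```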

For our Wayfair use case, we decided to fine-tune the pre-trained model and pair it with one-shot learning. We wanted to supplement the model’s knowledge of the English language with knowledge of the home furnishings space, and also teach the model the “Wayfair Branding” that we hope to impart to the text we generate.
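The post doesn’t show the exact one-shot prompt we used, so the snippet below is only an assumed illustration of the general pattern. It places one worked demonstration (product details, separator, romance copy) before the new product’s details and a trailing separator for the model to continue from. The function name and example strings are hypothetical.

```python
SEP = "[SEP]"


def one_shot_prompt(example_details, example_copy, query_details):
    """Build a one-shot prompt: one worked demonstration, then the new product.

    The model is expected to continue the text after the final separator.
    """
    demo = f"{example_details} {SEP} {example_copy}"
    query = f"{query_details} {SEP}"
    return f"{demo}\n\n{query}"


print(one_shot_prompt(
    "Small Area Rug, jute",
    "This jute rug adds natural texture to any room.",
    "Large Area Rug, hand woven, wool",
))
```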

We had one day to build our solution for the hackathon, so we decided to focus on a single class of products: Area Rugs. We used the widely available pre-trained GPT-2 model from huggingface.co on a GPU box with a PyTorch backend, and fine-tuned it on existing romance copy from the Wayfair site. We formatted each training example as the product details, followed by the special token [SEP], followed by the romance copy. In the interest of time, we trained the model for one epoch on 10k examples, which took about 3 hours.
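A minimal sketch of that training-data formatting could look like the code below. Several details are assumptions for illustration: the attribute dictionaries, the helper names, and appending GPT-2’s `<|endoftext|>` token to mark where each description ends. Products without romance copy are skipped because they have no target text to learn from.

```python
SEP = "[SEP]"
EOS = "<|endoftext|>"  # GPT-2's end-of-text token; using it as a terminator is an assumption


def format_details(attributes):
    """Join attribute values in order, e.g. 'Large Area Rug, hand woven, wool'."""
    return ", ".join(str(value) for value in attributes.values())


def training_example(attributes, romance_copy):
    """One training string: product details, then [SEP], then the target romance copy."""
    return f"{format_details(attributes)} {SEP} {romance_copy.strip()}{EOS}"


def build_training_set(rows):
    """Format (attributes, romance_copy) rows, skipping products with no copy."""
    return [
        training_example(attributes, copy)
        for attributes, copy in rows
        if copy and copy.strip()
    ]
```

With Hugging Face Transformers, a new token such as [SEP] is typically registered via `tokenizer.add_special_tokens({"sep_token": "[SEP]"})`, followed by `model.resize_token_embeddings(len(tokenizer))` so the model has an embedding for it.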

Once the fine-tuning had been completed, we got to work tweaking our inputs to the model.

Because GPT models are unsupervised, we didn’t need to provide the model with input-output pairs at generation time; the art of working with this type of model is in crafting the right prompt format to get the type of output you’re looking for. When we started experimenting with different prompts, we found that the prompt made a drastic difference in the correctness and grammaticality of the outputs.

Our first attempts at a prompt failed pretty spectacularly:

![input = 'Large Area Rug, hand woven, wool [SEP]'

output = 'in dyed is wool The. Turkey inovenw- hand is rug areaThis [SEP] Rug Area ,ovenW- Hand, Rug Area , wool, Wool,ovenW- Hand, Rug Area wool, woven hand, Rug AreaLarge'](https://cdn.aboutwayfair.com/dims4/default/14c267c/2147483647/strip/true/crop/1252x194+0+0/resize/800x124!/format/jpg/quality/90/?url=https%3A%2F%2Fcdn.aboutwayfair.com%2F66%2Faf%2F080759af438b9f42bfbee2d7f204%2Fscreen-shot-2021-06-03-at-9.03.36%20AM.png)

![input = 'Convert short description to long paragraph: Large Area Rug, hand woven, wool'

output = ' is and wool% 100 of made is rug The. woven machine is and wool% 100 of made is rug The. home your to addition great a is rugThis [SEP] wool, woven hand, Rug Area Large: paragraph long to description shortvertCon'](https://cdn.aboutwayfair.com/dims4/default/fc6f926/2147483647/strip/true/crop/1248x272+0+0/resize/800x174!/format/jpg/quality/90/?url=https%3A%2F%2Fcdn.aboutwayfair.com%2F95%2F21%2F5b60b6c346dfad13de1de0d8684e%2Fscreen-shot-2021-06-03-at-9.03.51%20AM.png)

But eventually we got the hang of it:

![input = 'Write a short paragraph about this rug: Large Area Rug, hand woven, wool [SEP]'

output = 'This collection features a traditional look and feel, woven with wool. This rug adds a modern feel to a home.'](https://cdn.aboutwayfair.com/dims4/default/91231eb/2147483647/strip/true/crop/1250x192+0+0/resize/800x123!/format/jpg/quality/90/?url=https%3A%2F%2Fcdn.aboutwayfair.com%2F21%2F33%2F80ec434d48f48a8f8cdecb4401d2%2Fscreen-shot-2021-06-03-at-9.04.09%20AM.png)
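The prompt that finally worked can be captured in a small helper. Generated samples also usually need some post-processing. For a decoder-only model like GPT-2, Hugging Face’s `generate` returns the prompt tokens followed by the continuation, and a length limit can cut the text off mid-sentence. The sketch below is an assumed illustration: `build_prompt` and `extract_copy` are hypothetical names, and trimming to the last period is a simple heuristic.

```python
SEP = "[SEP]"
INSTRUCTION = "Write a short paragraph about this rug:"


def build_prompt(details):
    """Instruction + product details + separator, mirroring the prompt that worked."""
    return f"{INSTRUCTION} {details} {SEP}"


def extract_copy(generated):
    """Keep only the text after the first [SEP], trimmed to the last full sentence."""
    _, sep, tail = generated.partition(SEP)
    text = tail.strip() if sep else generated.strip()
    last_stop = text.rfind(".")
    return text[: last_stop + 1] if last_stop != -1 else text


prompt = build_prompt("Large Area Rug, hand woven, wool")
print(extract_copy(prompt + " This rug adds a modern feel to a home. It is"))
```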

Since generative text models are unsupervised, we can’t use standard machine learning (ML) metrics such as accuracy to measure the model’s performance. ROUGE and BLEU scores, which compare generated text against a reference text, serve a similar purpose. The ROUGE score is a good proxy for recall, measuring how many words from the reference text are found in the machine-generated text. Conversely, the BLEU score measures precision: how many words from the machine-generated text are found in the reference text. To benchmark, we calculated the BLEU score for our samples. It was understandably low given the model’s limited training time: approximately 1 out of every 100 examples had a non-zero score. Even so, the examples above show that with limited training time and data, we were able to generate short descriptions of area rugs that included the information provided in the prompt.
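For readers unfamiliar with these metrics, here is a simplified, from-scratch Python sketch of ROUGE-1 recall and an unsmoothed sentence-level BLEU. It uses whitespace tokenization and a single reference, which are simplifications. In practice, established implementations such as NLTK’s `sentence_bleu` handle tokenization, multiple references, and smoothing. Without smoothing, a sample that shares no 4-gram with its reference scores exactly zero, which can make zero scores common for short generated descriptions.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """All contiguous n-token windows of a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def rouge_n_recall(candidate, reference, n=1):
    """Fraction of reference n-grams that also appear in the candidate (clipped counts)."""
    cand = Counter(ngrams(candidate.lower().split(), n))
    ref = Counter(ngrams(reference.lower().split(), n))
    if not ref:
        return 0.0
    return sum((cand & ref).values()) / sum(ref.values())


def modified_precision(cand_tokens, ref_tokens, n):
    """Fraction of candidate n-grams found in the reference (clipped counts)."""
    cand = Counter(ngrams(cand_tokens, n))
    ref = Counter(ngrams(ref_tokens, n))
    total = sum(cand.values())
    return sum((cand & ref).values()) / total if total else 0.0


def sentence_bleu(candidate, reference, max_n=4):
    """Unsmoothed BLEU: geometric mean of 1..max_n precisions times a brevity penalty."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    precisions = [modified_precision(cand, ref, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0  # any zero precision makes the geometric mean zero
    log_avg = sum(math.log(p) for p in precisions) / max_n
    brevity = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return brevity * math.exp(log_avg)
```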

Checking our Work

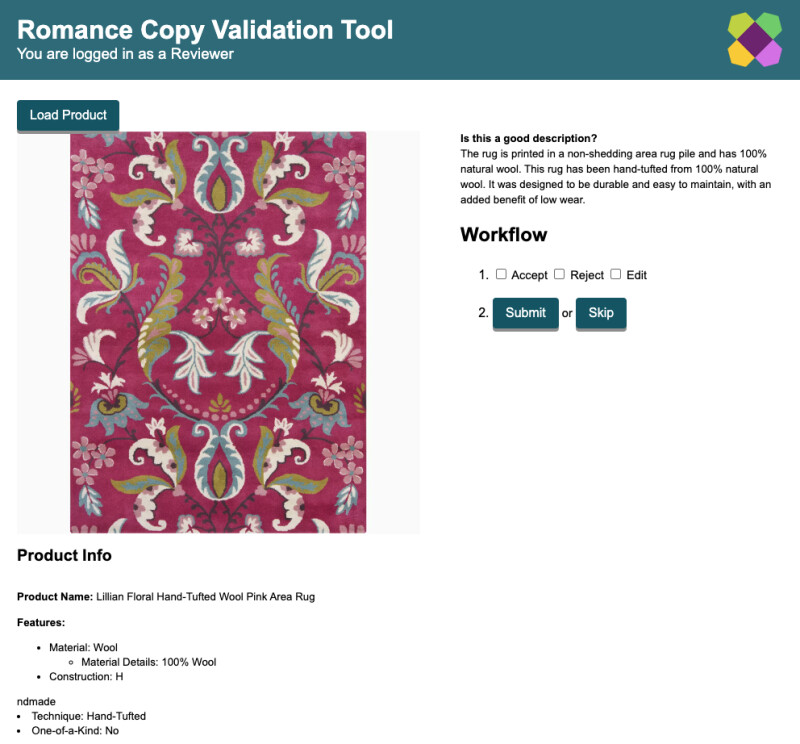

So we’ve generated romance copy for our products, now what? We check to make sure it’s good. While metrics like ROUGE and BLEU give us a general sense of how our model is performing, we want to be certain that the information we show our customers is both accurate and appropriate. To do this, we need a human in the loop to validate and correct our descriptions before we feel comfortable putting them on our site.

Human-in-the-loop workflows can be tricky and laborious, so we designed the solution to use a Google web app to streamline the process. The app would present reviewers with the product’s picture, details, and attributes, along with the auto-generated romance copy. The reviewer could then accept, reject, or edit the description, and note the reasoning for their decision.
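The review step can be modeled as one simple record per description. This is a hypothetical sketch of the data such an app might capture, not the actual app’s schema. Each review stores the decision, the reviewer’s reason, and, for edits, the corrected copy. `final_copy` returns what would be published, or `None` for a rejected description.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    ACCEPT = "accept"
    REJECT = "reject"
    EDIT = "edit"


@dataclass
class Review:
    """One reviewer decision on one auto-generated description (hypothetical schema)."""
    product_id: str
    generated_copy: str
    decision: Decision
    reason: str
    edited_copy: Optional[str] = None

    def __post_init__(self):
        # Enforce the workflow rules: edits carry new copy, every decision carries a reason.
        if self.decision is Decision.EDIT and not self.edited_copy:
            raise ValueError("an EDIT decision needs the corrected copy")
        if not self.reason.strip():
            raise ValueError("reviewers must note a reason")

    def final_copy(self):
        """Copy that would be published, or None if the description was rejected."""
        if self.decision is Decision.ACCEPT:
            return self.generated_copy
        if self.decision is Decision.EDIT:
            return self.edited_copy
        return None
```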

We are always innovating

It was a fun and challenging day building this solution for the hackathon, but the work doesn’t stop here! TwAIn drew a lot of interest, and we are meeting with business teams to see how we can take it further. The team is excited to train the model longer, test and tweak prompts, migrate to GPT-3, and use our validation results to improve the quality and correctness of the end product. Stay tuned: we might soon have auto-generated product descriptions on our site, and hopefully you won’t even notice.

References:

[1] T. Brown et al., “Language Models are Few-Shot Learners,” arXiv:2005.14165, 2020.