Wayfair is an international company with a presence in multiple countries: US, Canada, UK, and Germany. Where a UK customer might look to purchase a “heater”, a US customer might use the term “radiator” to describe the same thing. We want to recognize that both customers are referring to the same item, and to correctly interpret the feedback customers leave in the form of product reviews.

Multiply this by the more than 31 million active customers Wayfair currently has, on top of written feedback in the millions, and it quickly becomes impossible to follow.

That is why we set out to find a Natural Language Processing (NLP) technique that would help us address this challenge efficiently at scale. After conducting some research, we found one insightful approach in the following paper: “ExtRA: Extracting Prominent Review Aspects from Customer Feedback”.

Today we are proud to announce that thanks to a combination of efforts between our Data Science and Engineering teams, we’ve open-sourced our implementation of this paper which means that anyone can use this approach (the original paper didn’t provide code). The internal `extra-model` implementation has been used in production for over a year at Wayfair and is routinely used to analyze millions of reviews. We’re excited to have open-sourced our solution and to engage the larger Data Science community!

Motivation

First and foremost, we value any feedback our customers provide to us, be it reviews, return comments, NPS comments, customer-service-call transcripts, etc. We are committed to providing the best possible experience, and the easiest way to do so is to listen as keenly as possible to understand customer problems. We also want to make sure we’re fixing these issues as fast as possible, too. However, as mentioned above, doing this at scale is a challenge.

Here on the Tech Blog, we’ve written about BRAGI (our supervised BERT-based model) and how it helps us solve issues in this very domain. Why do we need another tool like `extra-model`?

The first difference between BRAGI and `extra-model` is that, technically, BRAGI is an example of a model that performs the Aspect Extraction NLP task, while `extra-model` is an example of an Aspect-Based Sentiment Analysis model. This is an important distinction because we want to understand two things: the content of our customers’ written feedback, and the sentiment they have expressed towards a particular topic. `extra-model` allows us to do both of these things.

Second, `extra-model` is entirely unsupervised. This means that we can pick up on an ever-changing landscape of customers’ feedback without tying ourselves to a taxonomy, as we did previously with BRAGI. For example, after running `extra-model` on return comments for certain items, we’re able to identify patterns across customer reviews that help us address product issues quickly. Unlocking this ability motivated us to add a way to run `extra-model` inside of the Customer Feedback Hub.

The final important difference is that `extra-model` performs semantic grouping. For example, it will group “heater” and “radiator” together, and it will also put all the different types of wood (oak, birch, cherry, maple, etc.) into the same group. This is a unique feature that we didn’t find in any other topic modelling approach we had previously researched (LDA, BigARTM).

We don’t limit the capabilities of `extra-model` to internal stakeholders: we also use it to improve the on-site experience for our customers directly. Specifically, `extra-model` is used as a backend for a user-facing feature that we call Bubble Filters, which looks like this:

We’ve run an A/B test on this experience and found Bubble Filters to be beneficial to our customers, as it allows for easier/faster scanning of Wayfair’s impressive catalog offerings to find an item that is just right.

Deep dive into `extra-model`

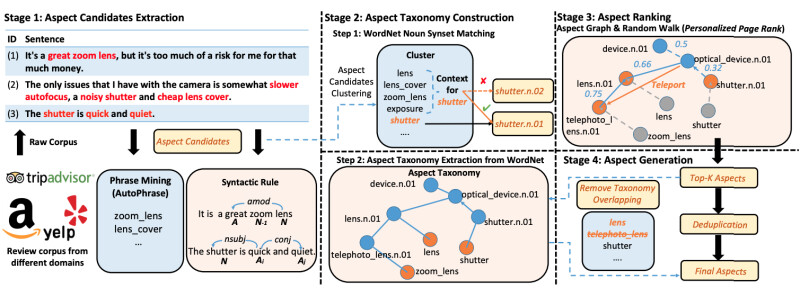

Now that we’ve established how `extra-model` is used at Wayfair, let’s dive deeper into how it actually works. The general overview of the framework is also available in the ExtRA paper:

Note that in our implementation we’ve chosen to drop the AutoPhrase step. In our experiments, we didn’t find it to be beneficial to our use-case, plus it greatly increased the technical complexity of the entire solution – it required Java to be installed on the machine running `extra-model`.

As an illustrative example, we’ll use a toy dataset consisting of 7 totally fictitious reviews:

`extra-model` consists of the following steps:

- Filtering

- Generate aspects

- Aggregate aspects into topics

- Analyze descriptors (adjectives)

- Link information

Let’s go over each of these steps and see what happens to the input at each stage.

Filtering

This is a general cleaning step that does the following things:

- Removes empty text fields

- Requires at least 20 characters of text

- Removes non-printable Unicode characters

- Filters for English language using Google's cld2 tool

In the example above, we are going to keep all 7 reviews since they satisfy all of the criteria.
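The filtering rules listed above can be sketched in a few lines of Python. This is a minimal illustration, not the code from `extra-model`: the function names are hypothetical, the order of the checks is an assumption, and `looks_english` is a crude placeholder for a real language detector such as cld2.

```python
from typing import Callable, Iterable, List

MIN_CHARS = 20  # minimum text length from the list above


def strip_non_printable(text: str) -> str:
    """Drop characters Python treats as non-printable (control codes, newlines)."""
    return "".join(ch for ch in text if ch.isprintable())


def looks_english(text: str) -> bool:
    """Hypothetical placeholder for a language detector such as cld2.

    Treating ASCII-only text as English is only good enough for this toy data.
    """
    return text.isascii()


def filter_reviews(
    reviews: Iterable[str],
    is_english: Callable[[str], bool] = looks_english,
) -> List[str]:
    kept = []
    for raw in reviews:
        if not raw or not raw.strip():
            continue  # empty text field
        text = strip_non_printable(raw).strip()
        if len(text) < MIN_CHARS:
            continue  # too short to be useful
        if not is_english(text):
            continue  # not English according to the detector
        kept.append(text)
    return kept


reviews = [
    "",
    "Too short",
    "The table is made of solid oak\x00 and looks great.",
    "Der Tisch ist wunderschön und sehr stabil gebaut.",
]
filtered = filter_reviews(reviews)
print(filtered)
```

Passing the detector in as a parameter keeps the sketch runnable without extra dependencies and makes it easy to swap in a real detector.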

Generate aspects

At this step, we extract all of the promising phrases. “Promising” for us includes any combination of “adjective + noun” (e.g., “great quality” or “solid wood”). We do so using the spaCy NLP tool.

In this running example we’re going to extract one phrase per review (e.g., “solid oak”, “solid birch” etc.). However, it is important to mention that in general reviews can (and very often do) mention multiple aspects.
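To make the “adjective + noun” idea concrete, here is a small sketch that works on tokens that have already been tagged with coarse part-of-speech labels. In the real pipeline the tags come from spaCy; here they are written by hand so the example has no dependencies. The function name is hypothetical, and matching only directly adjacent tokens is a simplifying assumption.

```python
from typing import List, Tuple

Token = Tuple[str, str]  # (text, coarse part-of-speech tag such as "ADJ" or "NOUN")


def adjective_noun_pairs(tokens: List[Token]) -> List[Tuple[str, str]]:
    """Return (adjective, noun) pairs for every adjective directly followed by a noun."""
    pairs = []
    for (word, pos), (next_word, next_pos) in zip(tokens, tokens[1:]):
        if pos == "ADJ" and next_pos == "NOUN":
            pairs.append((word.lower(), next_word.lower()))
    return pairs


# Hand-tagged toy review: "Great quality, and the solid wood feels sturdy."
tagged_review = [
    ("Great", "ADJ"), ("quality", "NOUN"), (",", "PUNCT"),
    ("and", "CCONJ"), ("the", "DET"), ("solid", "ADJ"),
    ("wood", "NOUN"), ("feels", "VERB"), ("sturdy", "ADJ"), (".", "PUNCT"),
]
pairs = adjective_noun_pairs(tagged_review)
print(pairs)
```

Note that “sturdy” is not extracted here because no noun follows it directly, which shows the limits of the adjacency assumption.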

Aggregate aspects into topics

Next, we take the output from the previous step and map it to WordNet. This step is non-trivial since almost every noun in the English language has multiple meanings (e.g., “leg” can be the leg of a journey, the leg of a person, or the leg of a table). The technical term for picking the right meaning is “word-sense disambiguation”. To do this, we construct a pseudo-context for each aspect from other extracted aspects that are close to it in an abstract embedding space. With this context in hand, we convert both the context of an aspect and the explanation of each candidate meaning to vectors (we use GloVe embeddings for that) and pick the meaning whose vector is closest to the context.
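The disambiguation idea can be illustrated with a toy example. Everything in this sketch is an assumption made for illustration: the two-dimensional vectors are made up (real GloVe vectors have many more dimensions), the sense labels and explanation words are hand-written rather than taken from WordNet, and averaging word vectors with cosine similarity is just one plausible way to compare a context with an explanation.

```python
import math
from typing import Dict, List

Vector = List[float]


def mean_vector(words: List[str], embeddings: Dict[str, Vector]) -> Vector:
    """Average the vectors of all words that have an embedding."""
    vectors = [embeddings[w] for w in words if w in embeddings]
    if not vectors:
        raise ValueError("none of the words has an embedding")
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def pick_sense(
    context_words: List[str],
    sense_explanations: Dict[str, List[str]],
    embeddings: Dict[str, Vector],
) -> str:
    """Return the sense whose explanation vector is closest to the context vector."""
    context_vec = mean_vector(context_words, embeddings)
    return max(
        sense_explanations,
        key=lambda s: cosine(context_vec, mean_vector(sense_explanations[s], embeddings)),
    )


# Made-up 2-D embeddings: axis 0 ~ "furniture", axis 1 ~ "travel".
toy_embeddings = {
    "table": [0.9, 0.1], "chair": [0.8, 0.2],
    "support": [0.7, 0.3], "furniture": [1.0, 0.0],
    "stage": [0.2, 0.8], "journey": [0.1, 0.9],
}
# Pseudo-context for the aspect "leg": other aspects extracted nearby.
context = ["table", "chair"]
senses = {
    "leg.furniture": ["support", "furniture"],
    "leg.journey": ["stage", "journey"],
}
best = pick_sense(context, senses, toy_embeddings)
print(best)
```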

Once we know the meaning of the noun, we proceed to cluster aspects into topics using graph processing tools provided by the NetworkX Python package. This is the exact “magic” sauce that makes `extra-model` so unique compared to any other approach we’ve tried.

After this stage in our example, all of the different types of wood will be mapped to the “wood” WordNet node.
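As a rough picture of what “mapping to a shared node” means, the sketch below groups aspects by a parent concept. It is deliberately simplified and is not the graph-based clustering used in `extra-model`: the parent links are hand-written for this example rather than read from WordNet, only a single level of the hierarchy is consulted, and the function name is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List

# Hand-written toy parent links (child -> parent) for illustration only.
TOY_PARENT = {
    "oak": "wood",
    "birch": "wood",
    "cherry": "wood",
    "maple": "wood",
}


def group_by_parent(aspects: List[str], parent: Dict[str, str]) -> Dict[str, List[str]]:
    """Group aspects under their parent concept; unknown aspects form their own topic."""
    topics = defaultdict(list)
    for aspect in aspects:
        topics[parent.get(aspect, aspect)].append(aspect)
    return dict(topics)


topics = group_by_parent(["oak", "birch", "cherry", "maple", "delivery"], TOY_PARENT)
print(topics)
```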

Analyze descriptors

To cluster aspects into topics we use WordNet, but WordNet only works for nouns (or noun phrases). Therefore, we convert all adjectives to vectors (again, using GloVe embeddings) and then use constant radius clustering.

At the end of this stage in our example, all “solid” adjectives will be mapped to “solid”, but in a more realistic scenario we would map things like “fantastic”, “awesome”, and “wonderful” to “great”. Together with the mapping of aspects to topics, this vastly reduces the number of unique combinations, making it much easier to spot patterns in customer feedback across various products and sources of feedback.
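One simple way to picture clustering with a constant radius is a greedy pass: each adjective joins the first existing cluster whose center lies within a fixed distance, and otherwise starts a new cluster. The sketch below follows that idea under stated assumptions: the two-dimensional vectors are made up, Euclidean distance and the radius value are arbitrary choices, and the exact variant used in `extra-model` may differ.

```python
import math
from typing import Dict, List, Tuple


def fixed_radius_clusters(vectors: Dict[str, List[float]], radius: float) -> Dict[str, str]:
    """Map each word to the label of the first cluster center within `radius`."""
    centers: List[Tuple[str, List[float]]] = []  # (label, center vector)
    assignment: Dict[str, str] = {}
    for word, vec in vectors.items():
        for label, center in centers:
            if math.dist(vec, center) <= radius:
                assignment[word] = label
                break
        else:
            # No center is close enough: this word starts its own cluster.
            centers.append((word, vec))
            assignment[word] = word
    return assignment


# Made-up 2-D adjective vectors; real GloVe vectors have many more dimensions.
toy_adjectives = {
    "great": [0.90, 0.90],
    "fantastic": [0.95, 0.85],
    "awesome": [0.85, 0.95],
    "solid": [0.10, 0.80],
    "wobbly": [0.10, 0.10],
}
mapping = fixed_radius_clusters(toy_adjectives, radius=0.2)
print(mapping)
```

In this greedy variant the result depends on the order of the input, since the first word seen in a region becomes the cluster label.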

We also add the sentiment of the aspect via the vaderSentiment Python package. The approach we use is very straightforward -- since we require each “phrase” to have an adjective associated with an aspect, we can use the sentiment of this adjective to assign a sentiment to the phrase.
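The “sentiment of the adjective becomes the sentiment of the phrase” rule can be sketched as a dictionary lookup. The lexicon below is a made-up stand-in with invented scores so that the example runs without dependencies; in the real pipeline the scores come from the vaderSentiment package. The function name and the output layout are hypothetical.

```python
from typing import Dict

# Made-up scores for illustration; not values from the VADER lexicon.
TOY_LEXICON: Dict[str, float] = {"great": 0.8, "solid": 0.3, "flimsy": -0.6}


def phrase_sentiment(adjective: str, noun: str, lexicon: Dict[str, float] = TOY_LEXICON) -> dict:
    """Assign the adjective's score to the whole phrase; unknown adjectives are neutral."""
    score = lexicon.get(adjective.lower(), 0.0)
    return {"phrase": f"{adjective} {noun}", "sentiment": score}


print(phrase_sentiment("solid", "oak"))
print(phrase_sentiment("flimsy", "leg"))
```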

Link information

You can see the full output of `extra-model` for our above example here. Below is what we think is the most important part of the output, namely, the mapping of aspects to topics and WordNet nodes:

At its completion, we’ve enriched each of the reviews we’ve used with semantic data that not only maps types of woods to their place in WordNet, but has also semantically combined them all into one big group. We can use this information in any downstream application, just like we’ve done in our Feedback Hub and our Bubble Filters feature.

We want your contribution!

Hopefully our deep dive has helped you generate a ton of ideas around how you can use `extra-model` in your projects. If so, you are in luck! As mentioned, `extra-model` is completely open-source! Feel free to use it for any purpose you see fit. The best place to start is our Quick Start Guide. If you have any questions, add an issue to our tracker and we’ll respond as soon as we can. Better yet, feel free to take a look at our repository and contribute to making `extra-model` great.

Finally, if you’ve found this walkthrough interesting, give us a star on GitHub!

In conclusion, we’d like to express our gratitude to Chris Antonellis from the Python Platforms team who partnered with us through the Auxiliary Engineering program at Wayfair. We would also like to thank Natali Vlatko from the Open Source Program Office. This effort would not have been possible without the help of these wonderful people!