Stylistic preference is an important factor when a home goods customer is deciding which product to buy, but it is very difficult to identify and define. Although designers have established different style categories, labeling a scene as adhering to a particular style is a highly subjective task. Furthermore, customers often cannot verbalize their style preferences, but can recognize them when looking at images. Thus, it is crucial to show products in room contexts that are tailored to a customer's taste.

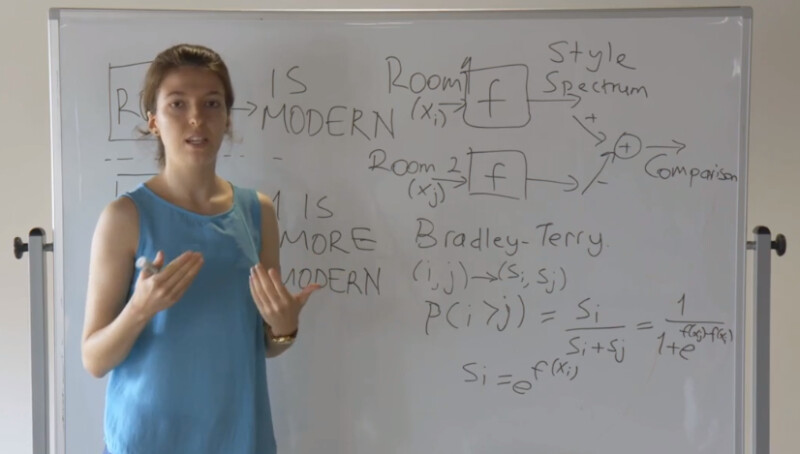

This week in Wayfair Data Science’s explainer series, Computer Vision intern Ilkay Yildiz discusses the Room Style Estimator (RoSE) framework at Wayfair. For this model, Wayfair’s Computer Vision team collects a dataset of room images labeled by interior design experts. These style labels show high variability between experts. We overcome this limitation by generating comparisons, each indicating the relative order of a pair of images with respect to a style. We present a deep-learning-based room image retrieval framework that learns to predict style from the generated comparisons. Given a seed room image, the RoSE framework predicts the style spectrum and provides a ranked list of stylistically similar room images from the catalog. Our architecture is inspired by Siamese networks and extends the Bradley-Terry model to learn from comparisons.
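To make the idea of learning from comparisons more concrete, here is a minimal sketch of a Bradley-Terry objective with a shared (Siamese-style) scoring function. It is an illustration under simplifying assumptions, not the RoSE implementation: the linear `score` function stands in for a deep network, the two-number feature vectors are made-up toy data, and all function names are hypothetical.

```python
import math

def score(weights, features):
    """Shared scoring function (a linear stand-in for a deep network).
    Both images in a pair pass through the same weights, as in a Siamese setup."""
    return sum(w * x for w, x in zip(weights, features))

def bt_probability(score_a, score_b):
    """Bradley-Terry probability that image A ranks above image B for a style:
    exp(s_a) / (exp(s_a) + exp(s_b)), written as a sigmoid of the score difference."""
    return 1.0 / (1.0 + math.exp(-(score_a - score_b)))

def comparison_loss(weights, comparisons):
    """Mean negative log-likelihood over (features_a, features_b, label) triples.
    label is 1 when A is judged more in-style than B, else 0."""
    total = 0.0
    for fa, fb, label in comparisons:
        p = bt_probability(score(weights, fa), score(weights, fb))
        p = min(max(p, 1e-12), 1.0 - 1e-12)  # guard against log(0)
        total -= label * math.log(p) + (1 - label) * math.log(1.0 - p)
    return total / len(comparisons)

def gradient_step(weights, comparisons, lr=0.5):
    """One gradient-descent step on the loss above.
    For a linear score, the per-pair gradient is (p - label) * (fa - fb)."""
    grad = [0.0] * len(weights)
    for fa, fb, label in comparisons:
        p = bt_probability(score(weights, fa), score(weights, fb))
        for k in range(len(weights)):
            grad[k] += (p - label) * (fa[k] - fb[k])
    n = len(comparisons)
    return [w - lr * g / n for w, g in zip(weights, grad)]

# Toy comparisons (assumed data): the first feature drives the style judgment.
comparisons = [
    ([1.0, 0.2], [0.0, 0.3], 1),
    ([0.1, 0.9], [0.8, 0.1], 0),
    ([0.9, 0.5], [0.2, 0.5], 1),
]

weights = [0.0, 0.0]
initial_loss = comparison_loss(weights, comparisons)
for _ in range(50):
    weights = gradient_step(weights, comparisons)
final_loss = comparison_loss(weights, comparisons)

# Rank a small hypothetical catalog by the learned style score, highest first.
catalog = {"room_a": [0.9, 0.1], "room_b": [0.2, 0.8], "room_c": [0.5, 0.5]}
ranked = sorted(catalog, key=lambda name: score(weights, catalog[name]), reverse=True)
```

Because only the difference between two scores enters the loss, the model never needs an absolute style label for any single image, which is what makes comparisons a natural fit when expert labels disagree.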

Ilkay Yildiz is a PhD student in the Electrical and Computer Engineering Department of Northeastern University. She completed an internship this summer on Wayfair’s Computer Vision team. Her PhD project is on learning from comparison labels for accurate and fast detection of the Retinopathy of Prematurity disease, one of the leading causes of childhood blindness. Her research interests span ranking and preference learning, deep learning, optimization, probabilistic modeling, and computer vision. In her spare time, she enjoys playing the violin and swimming.