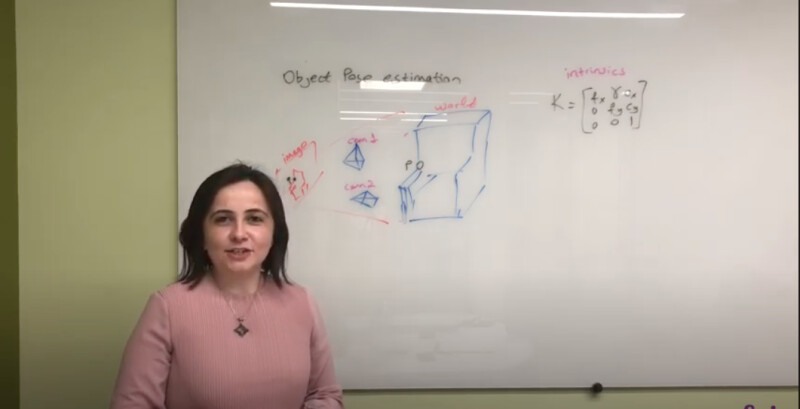

This week in Wayfair Data Science’s explainer series, we’re discussing object pose estimation, an important problem in robotics and augmented reality (AR) applications. In robotics, a mobile robot that is given a 3D model of an object must be able to localize that object in space in order to manipulate it. This localization process is also central to our AR work at Wayfair. On the Wayfair app, you can use AR to explore how our products look in your room. Estimating the pose of the selected item as you move your smartphone around the room is essential to providing the best AR experience. In this video, Wayfair data science manager Esra Cansizoglu explains how we solve this problem using a perspective-n-point (PnP) algorithm in a RANSAC framework.
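For readers who want a feel for the idea before watching, here is a minimal sketch of PnP inside a RANSAC loop, written with NumPy. It is an illustration under stated assumptions, not the implementation described in the video: the function names (`pnp_dlt`, `reproject`, `pnp_ransac`) are hypothetical, the camera intrinsics `K` are assumed known, lens distortion is ignored, and the pose solver is a simple linear DLT that needs at least six non-coplanar 3D-2D matches. Production systems typically use a minimal solver followed by nonlinear refinement, for example through OpenCV's `solvePnPRansac`.

```python
import numpy as np

def pnp_dlt(pts3d, pts2d_norm):
    """Linear (DLT) pose estimate from >= 6 non-coplanar 3D-2D matches.

    pts2d_norm are image points already multiplied by inv(K).
    Returns rotation R (3x3) and translation t (3,), with x_cam = R @ X + t.
    """
    n = len(pts3d)
    Xh = np.hstack([pts3d, np.ones((n, 1))])      # homogeneous 3D points
    A = np.zeros((2 * n, 12))
    A[0::2, 0:4] = Xh                             # P1.X - u * P3.X = 0
    A[0::2, 8:12] = -pts2d_norm[:, [0]] * Xh
    A[1::2, 4:8] = Xh                             # P2.X - v * P3.X = 0
    A[1::2, 8:12] = -pts2d_norm[:, [1]] * Xh
    P = np.linalg.svd(A)[2][-1].reshape(3, 4)     # null vector of A
    if np.linalg.det(P[:, :3]) < 0:               # resolve the sign ambiguity
        P = -P
    U, S, Vt = np.linalg.svd(P[:, :3])
    R = U @ Vt                                    # nearest rotation matrix
    t = P[:, 3] / S.mean()                        # undo the unknown scale
    return R, t

def reproject(pts3d, R, t, K):
    """Project 3D model points into the image, in pixel coordinates."""
    uv = (pts3d @ R.T + t) @ K.T
    return uv[:, :2] / uv[:, 2:3]

def pnp_ransac(pts3d, pts2d, K, iters=200, thresh_px=2.0, seed=0):
    """Fit a pose to random 6-point samples and keep the largest consensus set."""
    rng = np.random.default_rng(seed)
    pts2d_h = np.hstack([pts2d, np.ones((len(pts2d), 1))])
    norm = (pts2d_h @ np.linalg.inv(K).T)[:, :2]  # normalized image coordinates
    best = np.zeros(len(pts3d), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(pts3d), size=6, replace=False)
        R, t = pnp_dlt(pts3d[idx], norm[idx])
        err = np.linalg.norm(reproject(pts3d, R, t, K) - pts2d, axis=1)
        inliers = err < thresh_px
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 6:
        raise ValueError("RANSAC did not find enough inliers")
    R, t = pnp_dlt(pts3d[best], norm[best])       # refit on all inliers
    return R, t, best
```

Given matched model points `pts3d` (N×3) and pixel locations `pts2d` (N×2), `pnp_ransac(pts3d, pts2d, K)` returns the estimated rotation, translation, and a boolean inlier mask. The RANSAC step is what makes the estimate robust: mismatched features get voted out instead of dragging the pose away from the true solution.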

Esra Cansizoglu has a PhD in electrical engineering from Northeastern University and currently works as a data science manager at Wayfair. Her team tackles computer vision problems, and she is particularly interested in the information that can be extracted from images across domains, from recognizing style in room images to localizing objects for robotic perception. Originally from Turkey, she has lived in the Boston area for more than a decade.